Cognitive Services is a set of services that Microsoft offers for developing applications and solving problems in the AI area. It is built on a series of machine learning algorithms. Its purpose is to let application developers use machine learning capabilities in certain areas without needing machine learning specialists. All you need in order to use it is basic knowledge of the REST API concept: every service under Cognitive Services can be called with a REST request, and all responses are returned in JSON format.

The Cognitive Services APIs are grouped into five categories:

- Decision
  - Anomaly Detector (Preview)
  - Content Moderator
  - Personalizer
- Language
  - Immersive Reader (Preview)
  - Language Understanding
  - QnA Maker
  - Text Analytics
  - Translator Text
- Speech
  - Speech to Text
  - Text to Speech
  - Speech Translation
  - Speaker Recognition (Preview)
- Vision
  - Computer Vision
  - Custom Vision
  - Face Recognition
  - Form Recognizer (Preview)
  - Ink Recognizer (Preview)
  - Video Indexer
- Web Search
  - Bing Autosuggest, Custom Search, Entity Search, Image Search, Video Search, etc.

To use these service APIs, all you need is an Azure account: create your service and get its key.

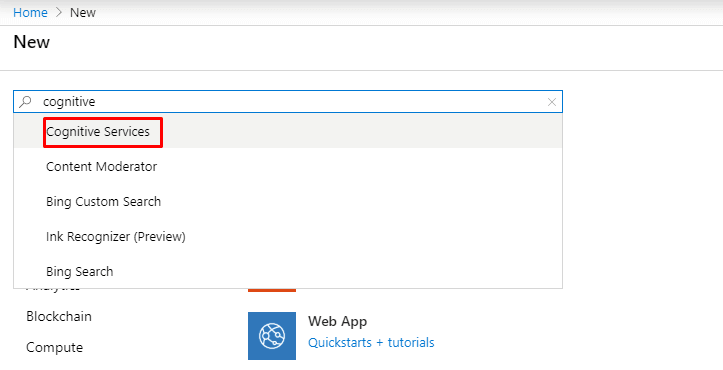

1 – Go to portal.azure.com and click to create a new resource.

2 – Search for Cognitive Services and create the service.

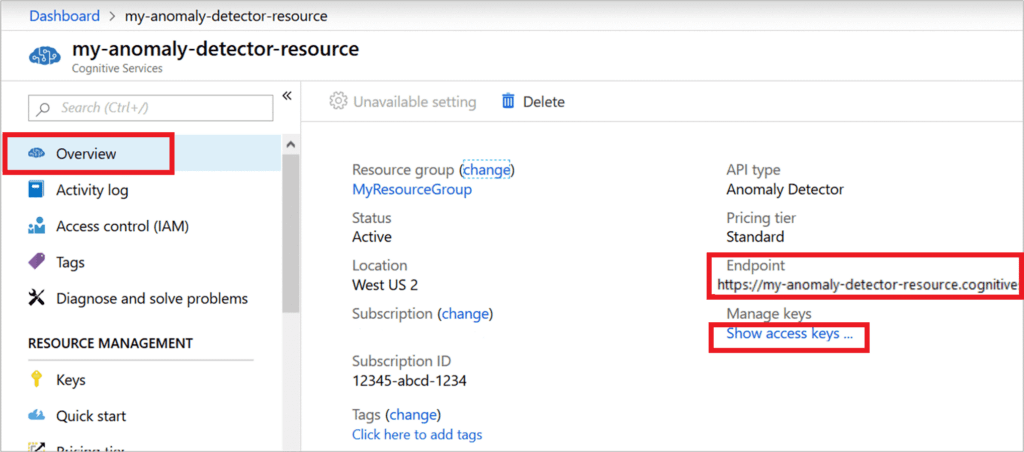

3 – Once the service is created, we can get our Endpoint and Key from the service's overview page or from the Keys page in the left pane, and we are ready to create our application.
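
As a rough illustration of what the Endpoint and Key are used for, here is a minimal Python sketch that builds (but does not send) a Face API detect request for an image URL. The environment variable names are the ones used in the console application later in this article; the function name `build_detect_request` and the example.com addresses are placeholders assumed for illustration.

```python
import json
import os
import urllib.request


def build_detect_request(endpoint, key, image_url):
    """Build (but do not send) a detect request for a publicly reachable image URL."""
    url = endpoint.rstrip("/") + "/face/v1.0/detect?returnFaceId=true"
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The key from the portal travels in this header on every call.
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder fallbacks so the sketch runs without a real Azure resource.
    endpoint = os.environ.get("FACE_ENDPOINT", "https://example.cognitiveservices.azure.com")
    key = os.environ.get("FACE_SUBSCRIPTION_KEY", "<your-key>")
    request = build_detect_request(endpoint, key, "https://example.com/faces.jpg")
    print(request.get_method(), request.full_url)
    # To actually call the service: urllib.request.urlopen(request)
```

Keeping the key in an environment variable rather than in the source code means it does not end up in version control.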

In this article, there is one service I would like to focus on: the Face API.

The main feature of the Face API is that it gives us algorithms for detecting, recognizing, and analyzing human faces in images. The algorithm builds a unique identity from 27 points on each face it analyzes and can tell us who a face belongs to by returning a unique id. If we teach the service who a face in an image belongs to, it will recognize that person in every subsequent call. Besides telling us who the face belongs to, the service can estimate age, hair color, emotion (for example 90% happy, 5% surprised), gender, glasses (reading or sun), and whether the face has makeup, including whether it is lip or eye makeup.

With this information, we do not need to develop separate software in our application to store person names and compare people with each other. The service's library offers functions that do this for us directly.
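
To make "teaching" the service a little more concrete, the sketch below lists the sequence of REST calls that, to my understanding of the v1.0 REST reference, registers a person and later identifies them. It only builds and prints the routes and sends nothing. The helper name `identification_workflow`, the group id `employees`, and the example host are assumptions made for illustration, so check the routes against the official reference before relying on them.

```python
def identification_workflow(endpoint, group_id, person_id="{personId}"):
    """Return the (method, url, purpose) steps for registering and identifying a person."""
    base = endpoint.rstrip("/") + "/face/v1.0"
    group = f"{base}/persongroups/{group_id}"
    return [
        ("PUT", group, "create the person group"),
        ("POST", f"{group}/persons", "create a person; the response carries a personId"),
        ("POST", f"{group}/persons/{person_id}/persistedFaces", "add a face image for that person"),
        ("POST", f"{group}/train", "train the group on the stored faces"),
        ("POST", f"{base}/identify", "match detected faceIds against the group"),
    ]


if __name__ == "__main__":
    # Hypothetical endpoint and group id, used only to print the plan.
    steps = identification_workflow("https://example.cognitiveservices.azure.com", "employees")
    for method, url, purpose in steps:
        print(f"{method:4} {url}  # {purpose}")
```

The faceId values passed to the identify call come from an earlier detect call, which is why detection is always the first step in a recognition scenario.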

I believe the scenarios are really open-ended now that this service is available. For example, you can build a door entry system with a camera placed at the entrance, or you can measure your customers' feelings with cameras located behind the checkout counters of stores.

When we send a photo to the Face API as a request, it returns the result in JSON format, as in the example below:

Detection result:

detection_01

JSON:

    [
      {
        "faceId": "ee499927-3c99-4d38-94cb-478d5248a6ce",
        "faceRectangle": { "top": 128, "left": 459, "width": 224, "height": 224 },
        "faceAttributes": {
          "hair": {
            "bald": 0.1,
            "invisible": false,
            "hairColor": [
              { "color": "brown", "confidence": 0.99 },
              { "color": "black", "confidence": 0.57 },
              { "color": "red", "confidence": 0.36 },
              { "color": "blond", "confidence": 0.34 },
              { "color": "gray", "confidence": 0.15 },
              { "color": "other", "confidence": 0.13 }
            ]
          },
          "smile": 1.0,
          "headPose": { "pitch": -13.2, "roll": -11.9, "yaw": 5.0 },
          "gender": "female",
          "age": 24.0,
          "facialHair": { "moustache": 0.0, "beard": 0.0, "sideburns": 0.0 },
          "glasses": "ReadingGlasses",
          "makeup": { "eyeMakeup": true, "lipMakeup": true },
          "emotion": {
            "anger": 0.0,
            "contempt": 0.0,
            "disgust": 0.0,
            "fear": 0.0,
            "happiness": 1.0,
            "neutral": 0.0,
            "sadness": 0.0,
            "surprise": 0.0
          },
          "occlusion": {
            "foreheadOccluded": false,
            "eyeOccluded": false,
            "mouthOccluded": false
          },
          "accessories": [
            { "type": "glasses", "confidence": 1.0 }
          ],
          "blur": { "blurLevel": "low", "value": 0.0 },
          "exposure": { "exposureLevel": "goodExposure", "value": 0.48 },
          "noise": { "noiseLevel": "low", "value": 0.0 }
        },
        "faceLandmarks": {
          "pupilLeft": { "x": 504.8, "y": 206.8 },
          "pupilRight": { "x": 602.5, "y": 178.4 },
          "noseTip": { "x": 593.5, "y": 247.3 },
          "mouthLeft": { "x": 529.8, "y": 300.5 },
          "mouthRight": { "x": 626.0, "y": 277.3 },
          "eyebrowLeftOuter": { "x": 461.0, "y": 186.8 },
          "eyebrowLeftInner": { "x": 541.9, "y": 178.9 },
          "eyeLeftOuter": { "x": 490.9, "y": 209.0 },
          "eyeLeftTop": { "x": 509.1, "y": 199.5 },
          "eyeLeftBottom": { "x": 509.3, "y": 213.9 },
          "eyeLeftInner": { "x": 529.0, "y": 205.0 },
          "eyebrowRightInner": { "x": 579.2, "y": 169.2 },
          "eyebrowRightOuter": { "x": 633.0, "y": 136.4 },
          "eyeRightInner": { "x": 590.5, "y": 184.5 },
          "eyeRightTop": { "x": 604.2, "y": 171.5 },
          "eyeRightBottom": { "x": 608.4, "y": 184.0 },
          "eyeRightOuter": { "x": 623.8, "y": 173.7 },
          "noseRootLeft": { "x": 549.8, "y": 200.3 },
          "noseRootRight": { "x": 580.7, "y": 192.3 },
          "noseLeftAlarTop": { "x": 557.2, "y": 234.6 },
          "noseRightAlarTop": { "x": 603.2, "y": 225.1 },
          "noseLeftAlarOutTip": { "x": 545.4, "y": 255.5 },
          "noseRightAlarOutTip": { "x": 615.9, "y": 239.5 },
          "upperLipTop": { "x": 591.1, "y": 278.4 },
          "upperLipBottom": { "x": 593.2, "y": 288.7 },
          "underLipTop": { "x": 597.1, "y": 308.0 },
          "underLipBottom": { "x": 600.3, "y": 324.8 }
        }
      }
    ]
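
As a small illustration of how an application might consume this response, the following Python sketch parses a trimmed copy of the JSON above and picks out the strongest emotion and the most likely hair color for each face. The function name `summarize_face` and the shape of the summary dictionary are my own choices for illustration, not part of the API.

```python
import json

# Trimmed copy of the sample response above, keeping only the fields used here.
SAMPLE_RESPONSE = """
[
  {
    "faceId": "ee499927-3c99-4d38-94cb-478d5248a6ce",
    "faceAttributes": {
      "hair": {
        "hairColor": [
          {"color": "brown", "confidence": 0.99},
          {"color": "black", "confidence": 0.57}
        ]
      },
      "age": 24.0,
      "glasses": "ReadingGlasses",
      "emotion": {"anger": 0.0, "happiness": 1.0, "neutral": 0.0, "surprise": 0.0}
    }
  }
]
"""


def summarize_face(face):
    """Reduce one detected face to a few values an application might display."""
    attrs = face["faceAttributes"]
    # The emotion with the highest score wins.
    emotion = max(attrs["emotion"], key=attrs["emotion"].get)
    # hairColor can be an empty list (for example when hair is not visible).
    hair = max(attrs["hair"]["hairColor"], key=lambda c: c["confidence"], default=None)
    return {
        "faceId": face["faceId"],
        "age": attrs["age"],
        "glasses": attrs["glasses"],
        "emotion": emotion,
        "hairColor": hair["color"] if hair else None,
    }


# The response is a JSON array with one entry per detected face.
summaries = [summarize_face(face) for face in json.loads(SAMPLE_RESPONSE)]
for summary in summaries:
    print(summary)
```

Because the response is an array, the same loop works unchanged for a photo with several faces, which is what a scenario such as measuring customer mood would rely on.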

Develop an Example Application

Let’s quickly develop a console application to see how this API works. We start by defining the endpoint and key that we got from the service’s Azure Portal page, as follows:

    using System;
    using System.IO;
    using System.Net.Http;
    using System.Net.Http.Headers;
    using System.Threading.Tasks;

    class Program
    {
        // Key and endpoint from the Azure Portal, read from environment variables.
        private static string subscriptionKey = Environment.GetEnvironmentVariable("FACE_SUBSCRIPTION_KEY");
        private static string uriBase = Environment.GetEnvironmentVariable("FACE_ENDPOINT");

        // Main must be async Task (C# 7.1 or later) because the body uses await.
        static async Task Main()
        {
            string imageFilePath = @"Images\faces.jpg";

            HttpClient client = new HttpClient();
            // Every request is authenticated with the subscription key header.
            client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

            // Ask for the face id and the listed attributes; landmarks are switched off here.
            string requestParameters = "returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes=age,gender,headPose,smile,facialHair,glasses,emotion,hair,makeup,occlusion,accessories,blur,exposure,noise";
            // TrimEnd avoids a double slash when the endpoint ends with "/".
            string uri = uriBase.TrimEnd('/') + "/face/v1.0/detect?" + requestParameters;

            byte[] byteData = GetImageAsByteArray(imageFilePath);

            using (ByteArrayContent content = new ByteArrayContent(byteData))
            {
                // The image is sent as raw bytes in the request body.
                content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
                HttpResponseMessage response = await client.PostAsync(uri, content);

                string contentString = await response.Content.ReadAsStringAsync();
                Console.WriteLine("\nResponse:\n");
                Console.WriteLine(contentString);
            }
        }

        // Reads the whole image file into memory and closes the file afterwards.
        static byte[] GetImageAsByteArray(string imageFilePath)
        {
            using (FileStream fileStream = new FileStream(imageFilePath, FileMode.Open, FileAccess.Read))
            using (BinaryReader binaryReader = new BinaryReader(fileStream))
            {
                return binaryReader.ReadBytes((int)fileStream.Length);
            }
        }
    }

The console app above is deliberately simple: it shows how to send a photo in a request and print the response. You can build your own scenarios on this service or on the other Cognitive Services.

References:

https://azure.microsoft.com/tr-tr/services/cognitive-services/

https://azure.microsoft.com/tr-tr/services/cognitive-services/face/

https://blogs.windows.com/windowsdeveloper/2017/02/13/cognitive-services-apis-vision/

https://docs.microsoft.com/tr-tr/azure/cognitive-services/face/overview